Solution Blueprint for Big Data on the Cloud Proof-Of-Concept

AWS documentation is extremely detailed. So wherever possible, I am going to provide the links to aws documenation/tutorials instead of rewriting the stuff.1. First and foremost we need to have AWS account, have the security details handy and create three S3 buckets (I created mine as bigdatapocinput, bigdatapocoutput and bigdatapocemrlogs). Please follow this detailed Video to get these done AWS Account setup and S3 bucket creation Before Proceeding further, you need to have these things:

- An AWS account is created

- Create a key pair

- Have the details of AccessKey and SecurityKey

- Three S3 buckets (one for input, one for output and one for EMR logs)

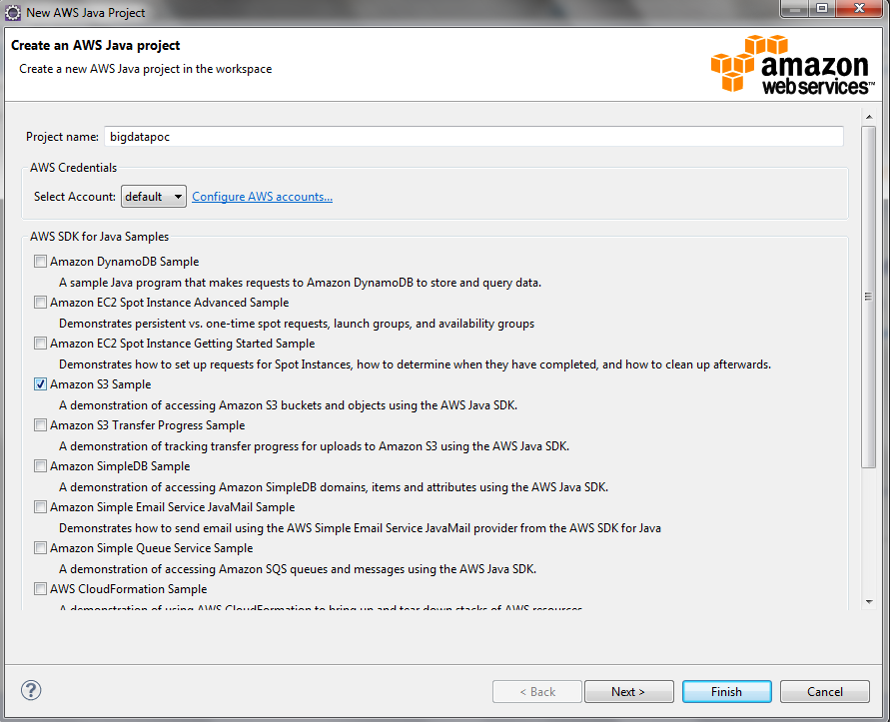

3. Create a new project using sample S3 project

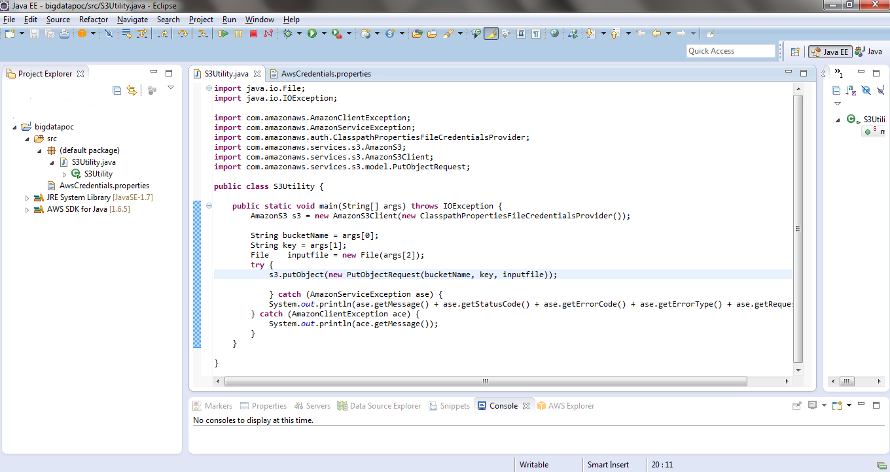

4. Make sure the AWSCredentials.properties in the classpath has the right details of accessKey and secretKey

5. Delete the S3Sample Java class that comes with the template project and create a new one (S3Utility), simplified with the below code.

import java.io.File;

import java.io.IOException;

import com.amazonaws.AmazonClientException;

import com.amazonaws.AmazonServiceException;

import com.amazonaws.auth.ClasspathPropertiesFileCredentialsProvider;

import com.amazonaws.services.s3.AmazonS3;

import com.amazonaws.services.s3.AmazonS3Client;

import com.amazonaws.services.s3.model.PutObjectRequest;

public class S3Utility {

public static void main(String[] args) throws IOException {

AmazonS3 s3 = new AmazonS3Client(new ClasspathPropertiesFileCredentialsProvider());

String bucketName = args[0];

String key = args[1];

File inputfile = new File(args[2]);

try {

s3.putObject(new PutObjectRequest(bucketName, key, inputfile));

} catch (AmazonServiceException ase) {

System.out.println(ase.getMessage() + ase.getStatusCode() + ase.getErrorCode() + ase.getErrorType() + ase.getRequestId());

} catch (AmazonClientException ace) {

System.out.println(ace.getMessage());

}

}

}

The project should look like this.

6. Export the project as a Runnable JAR file.

7. Get our Data source file (EOD Data) and unzip to the input folder. The path is Bhav copy from BSE – End of the Day file

8. Let's run the S3Utility as the executable JAR is ready, as shown above, three parameters are needed (in order) S3 Bucket name, S3 object key (use the file name) and file path in the local server. As mentioned earlier, I have already downloaded the End-of-Day file, unzipped and stored it in input folder.

So, my execution command is:

praveen@localhost:-$ java -jar bigdatapoc.jar bigdatapocinput/eoddata EQ121113.CSV EQ121113.CSV

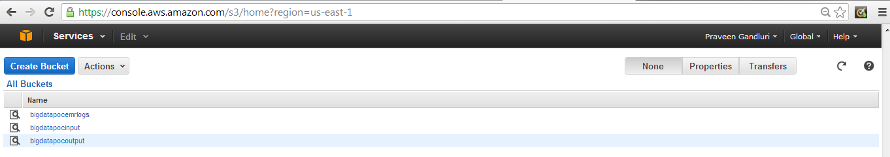

Login to AWS console and verify your upload in the S3 input bucket:

9. Schedule this command through a shell script to execute everyday/every hour etc., based on your input frequency for the Analytics. In my demonstration, I am scheduling this for every evening at 06:00 PM

10.Now, let's create a AWS Data Pipeline to Lauch an EMR and run analytics.

Previous Steps Next Steps